In June 2025, Tencent released Hunyuan-A13B, an open-source large language model (LLM) built on a fine-grained Mixture-of-Experts (MoE) architecture. The model has 80 billion total parameters, of which only 13 billion are active during inference.

This design offers a powerful yet computationally efficient solution for researchers and developers operating under resource constraints.

The Hunyuan team (@TencentHunyuan) announced the release in a post dated June 27, 2025:

"🚀 Introducing Hunyuan-A13B, our latest open-source LLM. As an MoE model, it leverages 80B total parameters with just 13B active, delivering powerful performance that scores on par with o1 and DeepSeek across multiple mainstream benchmarks. Hunyuan-A13B features a hybrid…"

By releasing Hunyuan-A13B as open source, Tencent makes a high-performance LLM available to a broad audience. Its architecture and efficient design suit applications ranging from academic research to enterprise solutions.

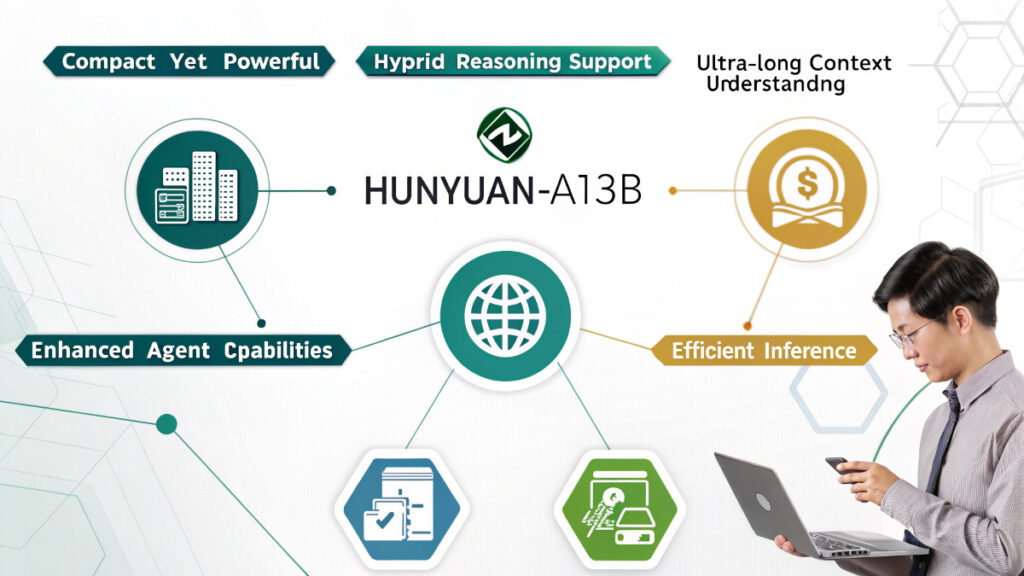

Key Features and Advantages

1. Compact Yet Powerful

Although it has 80 billion total parameters, Hunyuan-A13B activates only 13 billion of them for any given token during inference. Because per-token compute scales with the active parameters rather than the total, the model can deliver performance comparable to much larger models while significantly reducing computational overhead.
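To put the selective activation in numbers, the short sketch below works out the share of parameters used per token and a rough compute estimate. The "2 FLOPs per active parameter" figure is a common rule of thumb for a forward pass, not a measurement of Hunyuan-A13B, and the function names are purely illustrative.

```python
# Parameter counts quoted in the article, in billions.
TOTAL_PARAMS_B = 80
ACTIVE_PARAMS_B = 13


def active_fraction(active_b: float, total_b: float) -> float:
    """Share of the model's parameters used for any single token."""
    return active_b / total_b


def approx_flops_per_token(params_b: float) -> float:
    """Rule-of-thumb forward cost: about 2 FLOPs per parameter that is used."""
    return 2 * params_b * 1e9


fraction = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
print(f"Active share per token: {fraction:.2%}")
print(f"Approx. forward FLOPs per token (MoE): {approx_flops_per_token(ACTIVE_PARAMS_B):.1e}")
print(f"Same estimate for a dense 80B model:  {approx_flops_per_token(TOTAL_PARAMS_B):.1e}")
```

Under these assumptions, roughly one sixth of the parameters take part in each token's computation. All 80 billion still have to be held in memory.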

2. Hybrid Reasoning Support

Hunyuan-A13B supports both fast and slow thinking modes, enabling users to choose the appropriate reasoning approach based on their specific needs. This flexibility enhances the model’s applicability across various tasks.

3. Ultra-Long Context Understanding

The model natively supports a 256K context window, maintaining stable performance on long-text tasks. This capability is particularly beneficial for applications requiring the processing of extensive documents or conversations.
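A long context window is mostly a memory problem, because the keys and values of every token must be cached. The sketch below estimates that cache for a single 256K-token sequence. The context length and the 32 layers come from this article; the head dimension and head counts are illustrative assumptions, not confirmed values from the model's configuration, and 256K is taken to mean 256 × 1024 tokens. It compares a hypothetical layout with one key/value head per query head against the grouped layout that Grouped Query Attention (GQA) allows.

```python
def kv_cache_bytes(seq_len, num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence."""
    # Factor of 2: one tensor for keys and one for values in every layer.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


CONTEXT_TOKENS = 256 * 1024  # 256K context window (from the article)
NUM_LAYERS = 32              # from the article
HEAD_DIM = 128               # assumption, for illustration only
NUM_QUERY_HEADS = 32         # assumption, for illustration only
NUM_KV_HEADS = 8             # assumption, for illustration only

GIB = 1024 ** 3
full = kv_cache_bytes(CONTEXT_TOKENS, NUM_LAYERS, NUM_QUERY_HEADS, HEAD_DIM)
grouped = kv_cache_bytes(CONTEXT_TOKENS, NUM_LAYERS, NUM_KV_HEADS, HEAD_DIM)

print(f"One KV head per query head: {full / GIB:.0f} GiB")
print(f"Grouped KV heads (GQA):     {grouped / GIB:.0f} GiB")
print(f"Reduction factor:           {full // grouped}x")
```

With these assumed shapes and 16-bit cache entries, sharing key/value heads across groups of four query heads cuts the cache by a factor of four. The real saving depends on the model's actual head configuration.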

4. Enhanced Agent Capabilities

Optimized for agent tasks, Hunyuan-A13B achieves leading results on benchmarks such as BFCL-v3, τ-Bench, and C3-Bench. These enhancements make it well-suited for developing intelligent agents and automation tools.

5. Efficient Inference

By using Grouped Query Attention (GQA) and supporting multiple quantization formats, including FP8 and Int4, Hunyuan-A13B reduces the memory needed for inference. This efficiency lets the model run on less demanding GPU hardware, broadening its accessibility.
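As a rough guide to what quantization buys, the sketch below estimates the memory taken by the weights alone in each format. BF16 is included as an assumed 16-bit baseline. The estimate ignores quantization scales and other metadata, activations, and the key-value cache, so real deployments need more headroom.

```python
# Approximate storage cost per parameter in each format.
BYTES_PER_PARAM = {"BF16": 2.0, "FP8": 1.0, "Int4": 0.5}

# All 80B parameters must be stored, even though only 13B are active per token.
TOTAL_PARAMS = 80e9


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


for fmt, nbytes in BYTES_PER_PARAM.items():
    print(f"{fmt:>4}: ~{weight_memory_gb(TOTAL_PARAMS, nbytes):.0f} GB of weights")
```

Each halving of precision halves the weight footprint, which is why the quantized formats matter for fitting the model onto fewer or smaller GPUs.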

Architecture and Technical Specifications

Hunyuan-A13B’s architecture is designed to balance performance and efficiency:

- Mixture-of-Experts (MoE) Architecture: The model comprises 1 shared expert and 64 non-shared experts, with 8 of the non-shared experts activated for each token.

- Layers and Activations: It includes 32 layers and employs SwiGLU activation functions, contributing to its computational efficiency.

- Vocabulary Size: The model supports a vocabulary of 128K tokens, accommodating diverse language processing tasks.

- Grouped Query Attention (GQA): GQA shares each key/value head across a group of query heads, which shrinks the key-value cache during long-context inference and supports the model’s 256K context length.

- Quantization Support: Hunyuan-A13B supports multiple quantization formats, including FP8 and Int4, facilitating deployment in resource-constrained environments.
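The expert layout in the list above can be illustrated with a toy top-k router. This is a minimal sketch of the general technique, not Tencent's implementation: the softmax gating, the renormalisation of the selected weights, and the way the shared expert's output is simply added are all assumptions, and the "experts" here are stand-in functions rather than feed-forward networks.

```python
import math
import random

NUM_ROUTED_EXPERTS = 64  # non-shared experts (from the article)
TOP_K = 8                # routed experts activated per token (from the article)


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, top_k=TOP_K):
    """Return (expert_index, weight) pairs for the top_k highest-scoring experts."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    # Renormalise so the selected experts' weights sum to 1 (an assumption).
    return [(i, probs[i] / norm) for i in chosen]


def moe_layer(x, router_logits, routed_experts, shared_expert):
    """Add the always-on shared expert to the weighted outputs of the routed experts."""
    out = shared_expert(x)
    for idx, weight in route_token(router_logits):
        out += weight * routed_experts[idx](x)
    return out


# Toy experts: each one just scales a scalar input by a different factor.
random.seed(0)
routed_experts = [
    (lambda x, scale=(i + 1) / NUM_ROUTED_EXPERTS: x * scale)
    for i in range(NUM_ROUTED_EXPERTS)
]
shared_expert = lambda x: x
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_ROUTED_EXPERTS)]

selection = route_token(logits)
output = moe_layer(1.0, logits, routed_experts, shared_expert)
print("routed experts used:", sorted(i for i, _ in selection))
print("layer output:", output)
```

Only 8 of the 64 routed experts run for this token, plus the shared expert, which is the mechanism behind the gap between total and active parameters.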

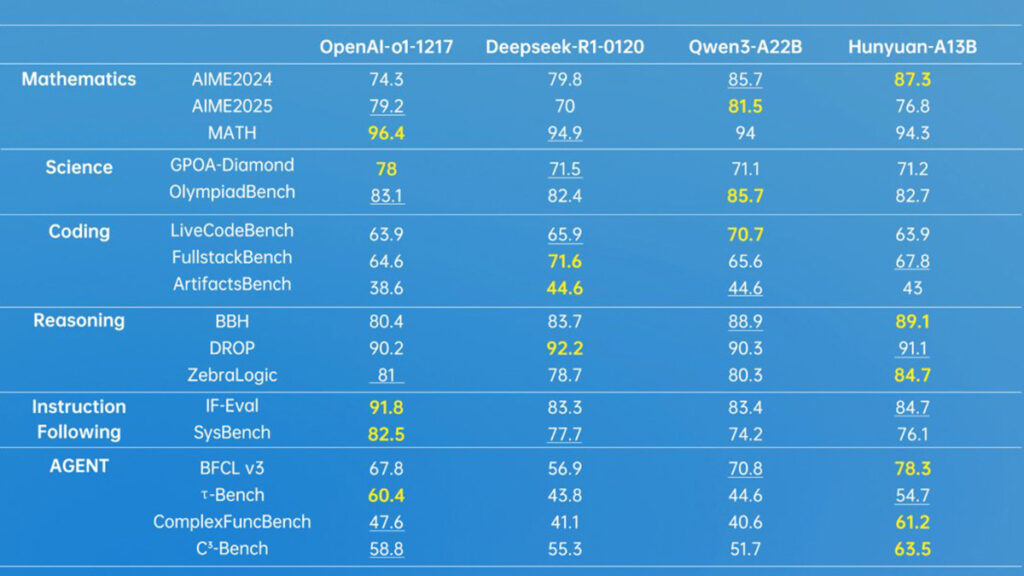

Benchmark Performance

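Tencent reports competitive scores for Hunyuan-A13B across a range of benchmarks. In the published tables, the MATH, GSM8k, BBH, and MBPP figures appear to be listed for the pretrained base model, while the IF-Eval and BFCL v3 figures are listed for Hunyuan-A13B-Instruct: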

Mathematics and Science

- MATH: Achieved a score of 72.35, outperforming several larger models.

- GSM8k: Scored 91.83, indicating strong capabilities in grade-school math problem-solving.

Reasoning and Instruction Following

- BBH: Achieved 87.56, showcasing robust reasoning abilities.

- IF-Eval: Scored 84.7, reflecting effective instruction-following performance.

Coding and Agent Tasks

- MBPP: Achieved 83.86, indicating proficiency in code generation tasks.

- BFCL v3: Scored 78.3, demonstrating enhanced agent capabilities.

Use Cases and Applications

Hunyuan-A13B’s design and capabilities make it suitable for a wide range of applications:

- Academic Research: Its open-source nature and efficient performance make it an excellent tool for researchers exploring natural language processing and AI.

- Enterprise Solutions: Businesses can leverage Hunyuan-A13B for developing intelligent agents, customer service bots, and other AI-driven applications.

- Educational Tools: The model’s proficiency in mathematics and instruction-following tasks makes it ideal for creating educational content and tutoring systems.

- Content Creation: With its strong performance in text generation, Hunyuan-A13B can assist in drafting articles, reports, and creative writing.

Getting Started with Hunyuan-A13B

To explore and utilize Hunyuan-A13B, follow these steps:

- Access the Model: Visit the GitHub repository to access the model’s code and documentation.

- Explore Pretrained Versions: Check out the pretrained models available on Hugging Face for immediate use.

- Review Documentation: Read the provided technical reports and manuals to understand the model’s capabilities and operational guidelines.

- Deploy the Model: Utilize the model in your applications, taking advantage of its efficient inference and robust performance across various tasks.
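The deployment step might look roughly like the sketch below, which uses the Hugging Face Transformers library. Several details are assumptions to check against the model card: the repository name `tencent/Hunyuan-A13B-Instruct`, the need for `trust_remote_code=True`, and the `enable_thinking` chat-template argument used here to switch between slow and fast thinking. The `build_messages` and `generate` helpers are hypothetical names introduced for illustration.

```python
MODEL_ID = "tencent/Hunyuan-A13B-Instruct"  # assumed Hugging Face repository name


def build_messages(user_prompt):
    """Wrap a prompt in the message format expected by chat templates."""
    return [{"role": "user", "content": user_prompt}]


def generate(user_prompt, slow_thinking=True, max_new_tokens=512):
    """Load the model and answer one prompt (needs transformers, torch, accelerate)."""
    # Imported lazily so build_messages can be used without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, device_map="auto", torch_dtype="auto", trust_remote_code=True
    )

    # Extra keyword arguments are passed through to the chat template.
    # Assumption: the template reads `enable_thinking` to toggle slow thinking.
    prompt_text = tokenizer.apply_chat_template(
        build_messages(user_prompt),
        tokenize=False,
        add_generation_prompt=True,
        enable_thinking=slow_thinking,
    )
    inputs = tokenizer(prompt_text, return_tensors="pt", add_special_tokens=False)
    inputs = inputs.to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode only the newly generated tokens, not the prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate("Explain grouped-query attention briefly.", slow_thinking=False)` would request a fast-thinking answer, provided the assumptions above hold. Loading the unquantized weights needs substantial GPU memory, so a quantized checkpoint may be the more practical starting point.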

Conclusion

Hunyuan-A13B is a powerful, efficient, and accessible large language model. Its MoE architecture and benchmark results make it a valuable option for researchers, developers, and businesses alike. By open-sourcing Hunyuan-A13B, Tencent has given the AI community a capable model to study and build on.